ALL NEWS

Date: March 19, 2026

Time: 14:30, Room A102

Title: Machine learning as part of civic tech case studies: How to leverage machine learning and data mining techniques when studying software production.

Abstract

Machine learning and data mining techniques have been an established part of quantitative and mixed-methods research for decades. However, the people who develop state-of-the-art machine learning techniques and the people who apply them tend to be siloed.

Children learn to recognize animals and their properties, and to count, early on, along with spelling words and humming animal sounds. It is challenging at the beginning. They play and learn the names of animals, their shapes, their edges, the sounds they make, and what they do for a living. Their parents challenge them with questions like “Where is the cow?”, “Whose sound is that?”, “How many chicks are there?”, “Where are the black sheep?”, and the children have to connect all those concepts through language, vision, and sound, among others. Eventually, they get very good at it and make their parents happy.

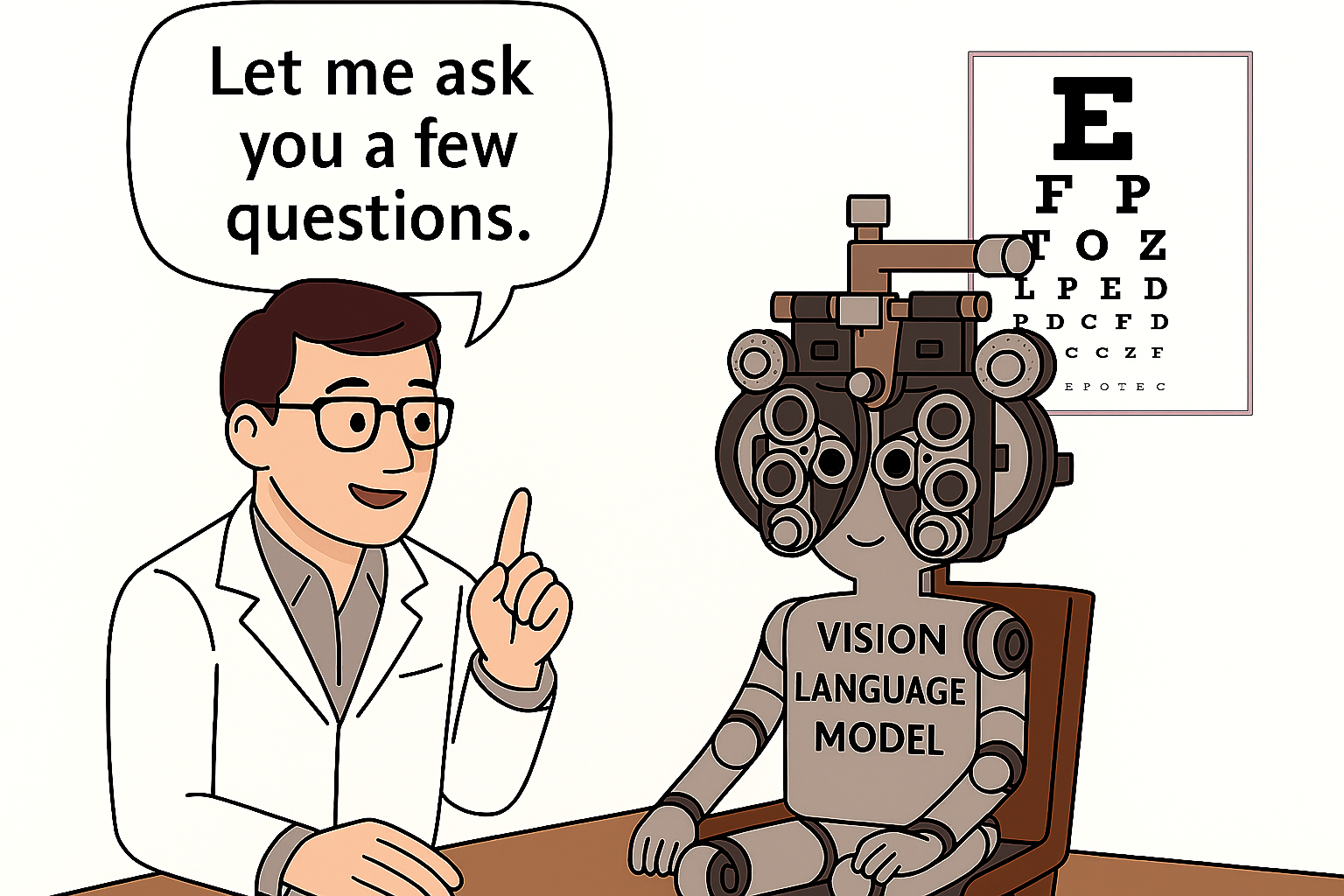

Vision-Language Models (VLMs), offspring of the Generative AI era, have amazed us with their ability to answer visual questions, generate images, and even navigate environments.

Now that most students are using generative AI in some form to deliver essays, code, and more, educators and a few research scholars are asking: “Is that doing any good? Is that empowering them and giving them more knowledge and ability to execute responsibly?” Consider that, over the last three years, since the advent of generative AI tools, educational institutions have taken two distinct approaches. In one camp, they adopted the tools fully, following the general hype without knowing their impact. In the other camp, which is shrinking as we speak, they failed to build fences around the tools, without knowing what to fence.

June 2025

Up until a few years ago, nobody would have expected computer vision systems to be able to talk. Until then, they were buried in systems with limited capabilities and transparency. Recently, we have been experimenting with so-called Vision Language Models. Researchers’ ambition is to integrate the vision and language skills of AI systems towards a multimodal artificial intelligence.

At the Signals and Interactive Systems Lab (University of Trento, Italy) we are looking for highly motivated and talented graduate students to join our research team and work on Conversational Artificial Intelligence. This umbrella term includes the following research areas:

- Natural Language Processing

- Dialogue Modeling and Systems

- Machine Learning

- Affective Computing

We are investigating and designing next-generation ML models for multimodal input/output processing in physical and hybrid environments and interactions.

23 June 2025 at 11:00 AM

Ferrari 1 Building, Via Sommarive 5, Povo (Trento)

Room n. 259, Augmented Health Environments Lab

Speaker: Dr. Firoj Alam (firojalam.one)

Abstract

Robust benchmarking is essential for understanding and advancing the capabilities of large language models (LLMs) across diverse linguistic and cultural landscapes. This talk explores the critical role of benchmarks in evaluating LLMs’ proficiency in native, local, and cultural knowledge, with a particular emphasis on multilingual and dialectal challenges.

May 2025

Due to easy-to-use language-based systems, generative AI has taken center stage in many industry sectors in the last few years. Neophytes and practitioners in public and private organizations from different company functions have been asked to work differently and create new products and services using intuitive and programmable interfaces. In the meantime, AI researchers have unveiled critical limitations of the deep-learning models that could hinder their inclusion in innovation processes, products, and services.

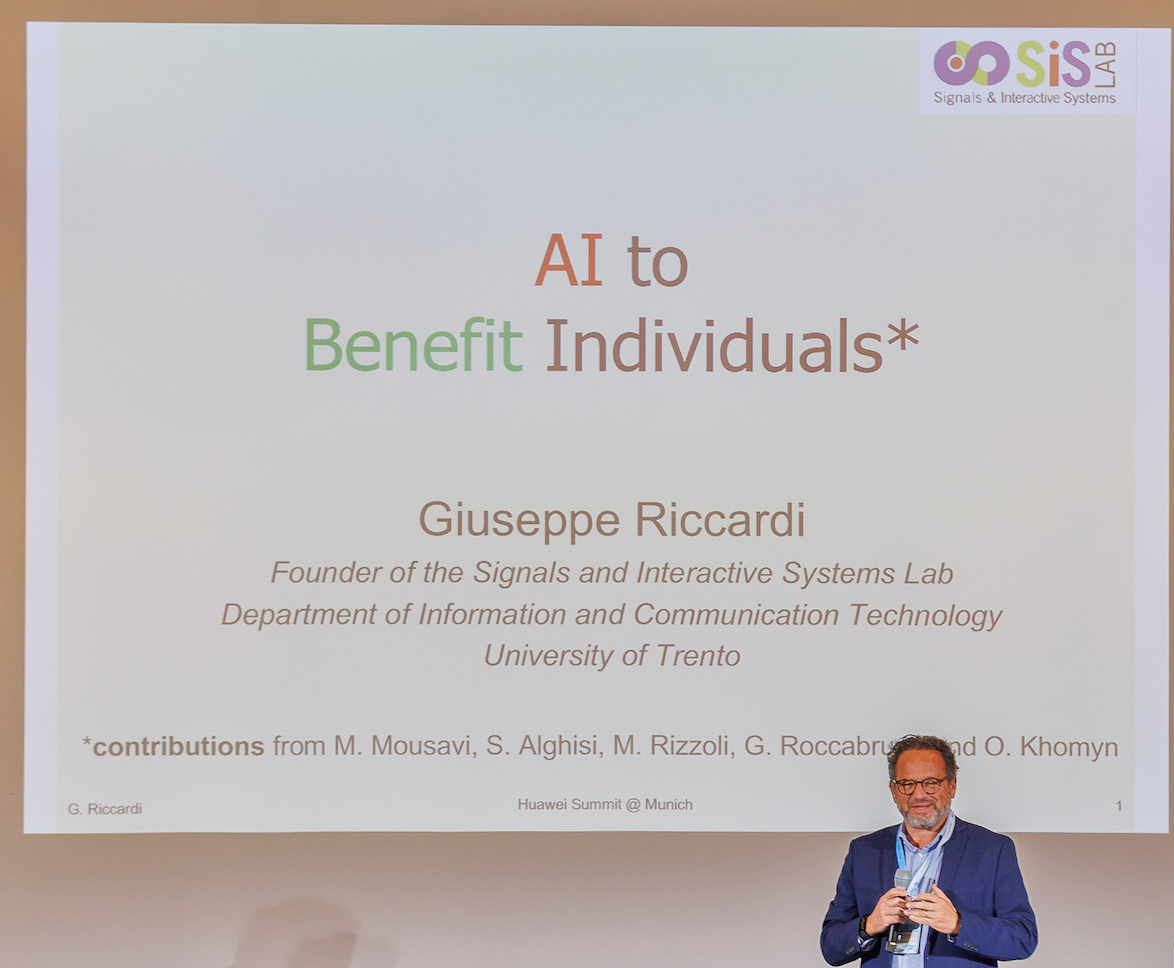

This talk will review the current state of the art in generative AI models, their severe limitations, and future challenges. Beneficial technology and systems must be created ab initio. We will give insights into how to create fundamental research challenges while pursuing innovation in AI system training and development.

March 2025

The Evocativity of Prediction, LLMs and DeepSeek

In December 2022, I got an unprecedented number of calls and messages from friends, entrepreneurs, students, and colleagues asking me if I knew about language models and urging me to meet them. They said: “I type, and the language model (i.e. ChatGPT) predicts and completes my sentences and requests!”. Mixed senses of void, excitement, and agitation were spreading fast. The term “language model” was popularized in a blast all over the planet. How did we get here?

In the early nineties, we witnessed the dawn of statistical language models. At that time, when I talked about our research on “stochastic language models” at AT&T Bell Laboratories (USA), people would scoff at that idea. We wanted to use them to empower machines to talk to humans. The topic was controversial then, and linguists criticized and ridiculed our research path. In 2000, at AT&T Labs, we had an aha moment when we connected a machine that could listen and talk to millions of customers calling with accents from all over the USA. It was an instant research and technological breakthrough, appreciated by the scientific and business communities, with little impact on the broad audience of consumers.

Fast forward in time: in 2011...

4 June 2025, 11:30 AM

Ferrari 1 Building, Via Sommarive 5, Povo (Trento)

Room n. 259, Augmented Health Environments Lab

Speaker: Koichiro Yoshino (Institute of Science Tokyo)

Abstract

With advances in core technologies of spoken dialogue systems, such as automatic speech recognition (ASR) and dialogue generation, real-world dialogue agents are becoming feasible. However, several issues arise when running such systems in real time and in the real world. Cascaded systems often suffer from significant latency in each module, resulting in interactions that are far from natural human dialogue. Recent research has begun to focus on real-time dialogue systems, such as Voice Activity Projection (VAP) and Moshi. While fast systems that predict turn-taking using acoustic cues have been widely studied, it is equally important to explore slower systems that anticipate dialogue based on the content of utterances.

In this talk, we introduce ongoing research toward real-time dialogue systems and present approaches that integrate both real-world robotic dialogue and insights from human cognitive mechanisms.

The MIT-IBM Watson AI Lab, a joint academic-industry research initiative, advances AI through foundational and applied work, emphasizing AI science for real-world impact. This talk outlines the Lab's mission, impact, and project portfolio before highlighting work from several Lab projects that focus on methods to efficiently enhance large language models (LLMs). This includes (1) disentangling knowledge from skills via synthetic data, enabling targeted skill integration; (2) training LLMs to self-report uncertainty for reliability; (3) creating a modular library of intrinsic capabilities as low-rank adapters (LoRAs) for parameter-efficient customization; and (4) scalable serving infrastructure for dynamic multi-adapter deployment. Together, these approaches enable efficient injection of specialized skills into LLMs while preserving core functionality and reducing computational costs. The discussion highlights their potential to democratize advanced AI capabilities and accelerate deployment across domains, exemplifying the Lab’s commitment to AI science for real-world impact.

Today was a big feel-good day for me and my colleagues Giovanni Iacca and Eleonora Aiello from the Department of Computer Science and Information Engineering at the University of Trento.

Our students' presentations delved into specific aspects of how medical professionals, from ophthalmology, neurosurgery, cardiology, radiology, endovascular surgery, and health physics, could benefit from AI systems.

They showcased how AI may help reduce the burnout of radiologists, support neurosurgeons in improving the precision in delivering brain stimulation for Parkinson's patients, detect postoperative delirium, improve the tracking of white matter pathways, predict toxicity in radiotherapy, and many more high-impact issues in medicine and health.

Go students, enjoy the rest of the ride!

Research in human-machine dialogue (aka ConvAI) has been driven by the quest for open-domain, knowledgeable and multimodal agents. In contrast, the complex problem of designing, training and evaluating a conversational system and its components is currently reduced to a) prompting LLMs, b) coarse evaluation of machine responses and c) poor management of the affective signals. In this talk, we will review the current state of the art in human-machine dialogue research and its limitations. We will present the most challenging frontiers of conversational AI when the objective is to create personal conversational systems that benefit individuals. In this context, we will report experiments and randomized controlled trials (RCTs) of so-called personal healthcare agents supporting individuals and healthcare professionals.

Longitudinal Dialogues (LD) are the most challenging type of conversation for human-machine dialogue systems. LDs include the recollections of events, personal thoughts, and emotions specific to each individual in a sparse sequence of dialogue sessions. Dialogue systems designed for LDs should uniquely interact with the users over multiple sessions and long periods of time (e.g. weeks), and engage them in personal dialogues to elaborate on their feelings, thoughts, and real-life events.

In this talk, the speaker presents the process of designing and training dialogue models for LDs, starting from the acquisition of a multi-session dialogue corpus in the mental health domain, through models for user profiling and personalization, to fine-tuning state-of-the-art Pre-trained Language Models and evaluating the models using human judges.

The Laboratory for Augmented Health Environments will develop data, AI and robotics-driven prototypes and train future surgeons and healthcare professionals. It is a collaboration of the University of Trento with the School of Medicine and the Regional Healthcare Services.

5:00 PM - 7:00 PM | Monday, 13 November 2023

Introduced and moderated by:

Paola Iamiceli, University of Trento

Discussants:

Marco Zenati, Harvard Medical School and UniTrento;

Cesare Hassan, Hunimed; Marco Francone, Hunimed;

Carlo C. Quattrocchi, UniTrento and APSS; Lorenzo Luciani, APSS;

Andrea Passerini, UniTrento; Giuseppe Riccardi, UniTrento;

Eleonora Marchesini, FBK; Roberto Carbone, FBK;

Marta Fasan, UniTrento